Artificial intelligence (AI) technology has changed the delivery of traditional financial services, offering opportunities to boost revenue while reducing costs. However, its advancement brings new challenges and risks, particularly in complying with existing laws that are often outdated and ill-suited to such technologies.

It is therefore important to understand the regulatory intricacies before implementing AI in your business. This article analyzes the use of artificial intelligence in consumer finance in the context of responsible lending obligations.

Responsible Lending

The responsible lending obligations will apply to you if you provide credit, or assist consumers to obtain credit or a credit limit increase, where the credit is provided to individuals or strata corporations for personal, domestic, or household purposes, or for the purchase or improvement of residential investment property.

The responsible lending obligations are provided under Chapter 3 of the National Consumer Credit Protection Act 2009 (Cth) (NCCPA). Generally, they prohibit credit licensees from entering into or assisting a consumer with a credit product that is not suitable for them.


The NCCPA requires providers of credit assistance and credit to make an assessment as to whether the credit contract will be unsuitable for the consumer if the contract is entered into or the credit limit is increased. Before making the assessment, the provider is required to:

- Make reasonable inquiries about the consumer's requirements and objectives regarding the credit product.

- Make reasonable inquiries about the consumer's financial situation.

- Take reasonable steps to verify the consumer's financial situation.

There are certain circumstances in which an application for a credit product or an increase to a credit limit must be assessed as unsuitable. These include where, at the time of the assessment, it is likely that:

- The consumer will be unable to comply with their financial obligations under the credit product, such as not being able to meet repayments when they are due.

- The consumer will only be able to meet their financial obligations under the credit product with substantial hardship.

- The credit product will not meet the consumer's requirements or objectives.

Beyond these mandatory circumstances, you may also determine in other scenarios that a credit product or a credit limit increase is unsuitable, and decline the application.
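To make the assessment logic concrete, here is a minimal Python sketch that encodes the three mandatory grounds as a rule check. It assumes the lender's inquiries and verification have already produced a judgment on each ground; the names `SuitabilityFindings`, `mandatory_unsuitability_reasons`, `is_unsuitable` and the `other_grounds` parameter are hypothetical, and the code illustrates the structure of the test rather than stating how the NCCPA must be applied.

```python
from dataclasses import dataclass


@dataclass
class SuitabilityFindings:
    """Hypothetical record of what the lender judges *likely* at the time of
    assessment, based on its inquiries and verification."""
    likely_unable_to_comply: bool
    likely_substantial_hardship: bool
    meets_requirements_and_objectives: bool


def mandatory_unsuitability_reasons(f: SuitabilityFindings) -> list[str]:
    """Return every mandatory ground on which the product must be assessed as unsuitable."""
    reasons = []
    if f.likely_unable_to_comply:
        reasons.append("consumer likely unable to comply with financial obligations")
    if f.likely_substantial_hardship:
        reasons.append("consumer likely able to comply only with substantial hardship")
    if not f.meets_requirements_and_objectives:
        reasons.append("product likely not to meet the consumer's requirements or objectives")
    return reasons


def is_unsuitable(f: SuitabilityFindings, other_grounds: bool = False) -> bool:
    # Any single mandatory ground is sufficient. The hypothetical `other_grounds`
    # flag lets the lender decline for reasons outside the mandatory list.
    return bool(mandatory_unsuitability_reasons(f)) or other_grounds


findings = SuitabilityFindings(
    likely_unable_to_comply=False,
    likely_substantial_hardship=True,
    meets_requirements_and_objectives=True,
)
print(mandatory_unsuitability_reasons(findings))
# ['consumer likely able to comply only with substantial hardship']
```

Returning the list of grounds, rather than a bare yes or no, keeps a record of why an application was assessed as unsuitable, which makes the outcome easier to explain to the consumer.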

Challenges

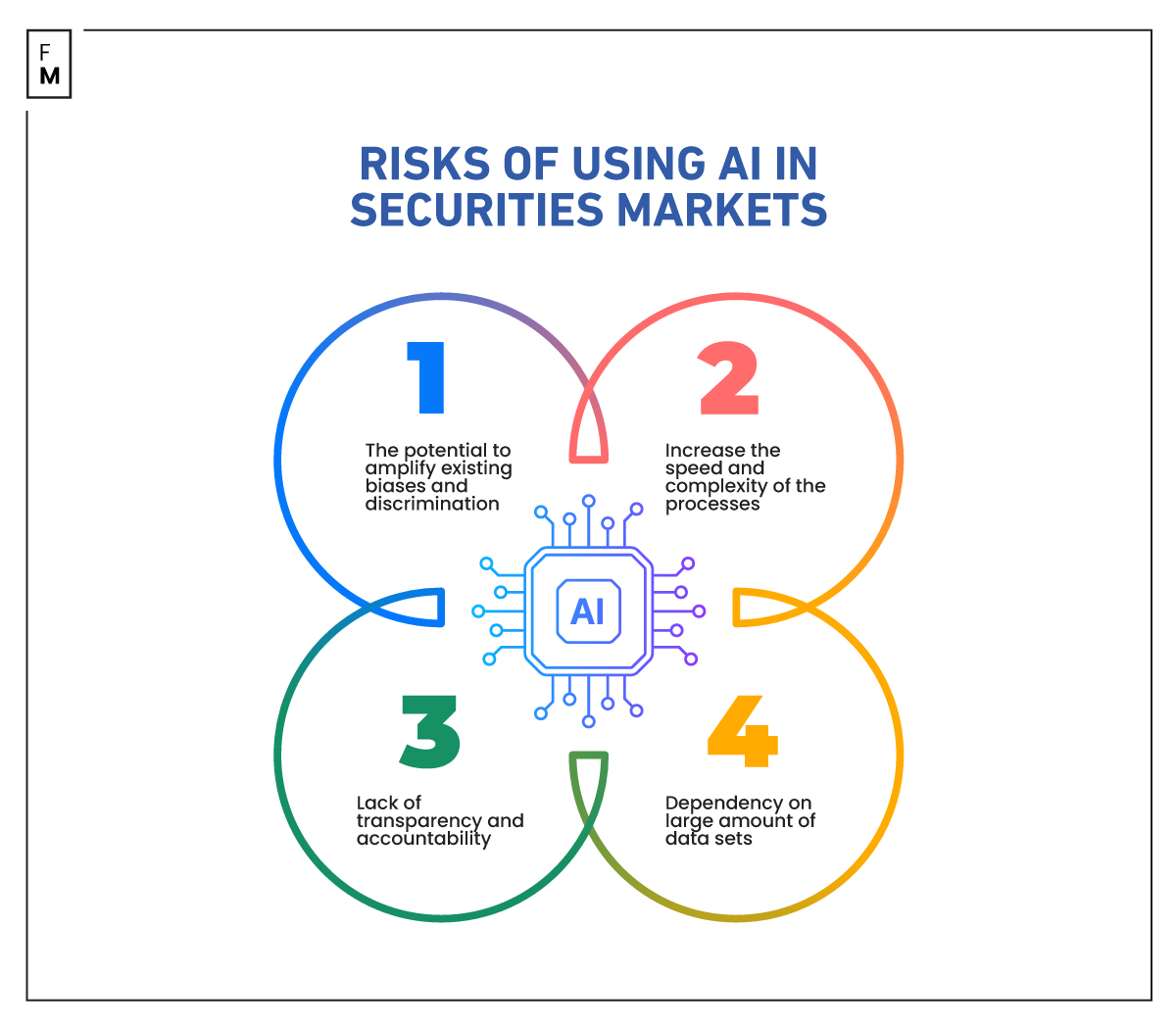

AI technologies are increasingly being used in the application approval process to assess unsuitability. However, a key challenge is that the underlying data may be skewed, embedding discrimination into the outcomes. To overcome this hurdle, AI technology needs to be based on transparent models that recognize and address the potential for bias.

If you intend to rely on this technology, you should be able to explain to consumers how the AI technology reaches its outcome and how that outcome is consistent with your responsible lending obligations.
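One way a transparent model can support such explanations is by reporting which inputs pushed an applicant's score towards "unsuitable". The sketch below assumes a simple logistic scoring model; the feature names, weights, intercept and baseline applicant are all hypothetical and illustrative, and ranking linear contributions against a baseline is just one simple explanation technique, not a regulatory requirement.

```python
import math

# Hypothetical features and hand-picked weights for a transparent logistic model.
# Positive weights push the score towards "unsuitable". Illustrative only.
WEIGHTS = {
    "repayment_to_income": 4.0,
    "existing_debt_to_income": 1.5,
    "missed_payments_12m": 0.8,
}
INTERCEPT = -3.0


def unsuitability_score(applicant: dict[str, float]) -> float:
    """Logistic score in (0, 1); higher means more likely to be unsuitable."""
    z = INTERCEPT + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def top_reasons(applicant: dict[str, float], baseline: dict[str, float], k: int = 2) -> list[str]:
    """Rank features by how much each raises the linear score above a baseline applicant."""
    contributions = {
        name: w * (applicant[name] - baseline[name]) for name, w in WEIGHTS.items()
    }
    adverse = [(name, c) for name, c in contributions.items() if c > 0]
    adverse.sort(key=lambda item: item[1], reverse=True)
    return [name for name, _ in adverse[:k]]


applicant = {"repayment_to_income": 0.45, "existing_debt_to_income": 0.6, "missed_payments_12m": 2}
baseline = {"repayment_to_income": 0.25, "existing_debt_to_income": 0.3, "missed_payments_12m": 0}
print(round(unsuitability_score(applicant), 3))  # 0.786
print(top_reasons(applicant, baseline))  # ['missed_payments_12m', 'repayment_to_income']
```

Because every contribution is a weight multiplied by a difference in an input, each reason can be traced back to a specific piece of information the consumer provided or the lender verified.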

Continuous testing and monitoring of the technology are necessary to reduce the likelihood of new biases emerging and to redress any biases or inaccuracies promptly.
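As an illustration of what ongoing monitoring might check, the sketch below compares approval rates across groups in a window of recent decisions and flags any group whose rate falls well below the best-performing group. The group labels and data are made up, and the default 0.8 threshold is an assumption borrowed from the US "four-fifths" rule of thumb, not an Australian regulatory standard.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            approvals[group] += 1
    return {group: approvals[group] / totals[group] for group in totals}


def disparity_alerts(decisions, threshold=0.8):
    """Flag groups whose approval rate is below `threshold` times the highest group's rate."""
    rates = approval_rates(decisions)
    best = max(rates.values(), default=0.0)
    if best == 0:
        return {}  # no approvals at all (or no data): nothing to compare against
    return {group: rate / best for group, rate in rates.items() if rate / best < threshold}


# Hypothetical monitoring window: group labels and outcomes are made up.
window = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
print(disparity_alerts(window))  # {'B': 0.625}
```

A flag is a prompt for human investigation rather than proof of discrimination; the differing rates may reflect legitimate differences in financial situation that the responsible lending assessment is meant to capture.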

Robust data privacy and security measures are paramount to the reliability and integrity of AI technologies, and consumers should be able to trust that their data will be managed responsibly. For example, it may be appropriate to anonymize personally identifiable information when applying machine learning techniques, or to use synthetic data instead.
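A common first step is to replace direct identifiers with keyed tokens before data reaches a training pipeline. The sketch below uses HMAC-SHA256 from Python's standard library; the field names and key are placeholders. Note that this is pseudonymisation rather than full anonymisation: anyone holding the key can recompute tokens for known values, so the output may still count as personal information and the key needs to be protected accordingly.

```python
import hashlib
import hmac

# Hypothetical set of fields treated as directly identifying.
PII_FIELDS = {"name", "email", "phone"}


def pseudonymise(record: dict, key: bytes) -> dict:
    """Replace PII fields with keyed HMAC-SHA256 tokens; leave other fields unchanged.

    The same input and key always yield the same token, so records can still be
    joined for model training without exposing the raw identifier.
    """
    out = {}
    for field_name, value in record.items():
        if field_name in PII_FIELDS:
            out[field_name] = hmac.new(key, str(value).encode("utf-8"), hashlib.sha256).hexdigest()
        else:
            out[field_name] = value
    return out


key = b"replace-with-a-secret-from-a-key-vault"  # placeholder; never hard-code in practice
row = {"name": "Jane Citizen", "email": "jane@example.com", "monthly_income": 6500}
print(pseudonymise(row, key)["monthly_income"])  # 6500
```

Using a keyed HMAC rather than a plain hash matters because common identifiers such as email addresses can otherwise be re-identified by hashing a list of guesses.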

It is also important to guard against data intrusions and to develop controls that identify and address such risks, such as restricting who can control and modify the algorithms.
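Restricting control of algorithms can be as simple as allowing only designated roles to change the model used in live decisions and recording every attempt. The sketch below assumes a hypothetical in-memory `ModelRegistry` and made-up role names; a production system would back this with the organisation's own identity management and tamper-resistant logging.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles allowed to change the model used in production decisions.
ALLOWED_ROLES = {"model_risk_owner", "credit_risk_lead"}


@dataclass
class ModelRegistry:
    active_version: str
    audit_log: list = field(default_factory=list)

    def deploy(self, user: str, role: str, new_version: str) -> bool:
        """Switch the active model only for permitted roles; log every attempt."""
        permitted = role in ALLOWED_ROLES
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "requested_version": new_version,
            "permitted": permitted,
        })
        if permitted:
            self.active_version = new_version
        return permitted


registry = ModelRegistry(active_version="v1")
registry.deploy("alice", "data_analyst", "v2")     # refused, but logged
registry.deploy("bob", "model_risk_owner", "v2")   # accepted
print(registry.active_version, len(registry.audit_log))  # v2 2
```

Logging refused attempts as well as successful ones gives a record of who tried to change the model and when, which also supports the accountability discussed in this article.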

Good governance in relation to AI technologies is more likely to facilitate a smooth integration into the business. There needs to be an appropriate allocation of human and financial resources, together with clear lines of responsibility and accountability. A decision framework for AI centered upon responsible lending obligations, together with formal training programs, will translate into trusted and responsible use of AI.


Ultimately, as we continue to transition to a digital economy, it is pivotal that the use of AI technology in consumer finance focuses on:

- transparency, so that the reasons for an assessment of unsuitability can be explained to consumers,

- controlled implementation so that data privacy and security can be maintained while also ensuring any biases are corrected for outcomes that are consistent with responsible lending obligations, and

- accountability to encourage trust and confidence in AI, including the decision-making behind such technology.