The European Central Bank (ECB) has highlighted the need to monitor the use of artificial intelligence (AI) in the financial sector closely and suggested that regulatory initiatives may be necessary to address potential market failures.

ECB Calls for Monitoring and Potential Regulation of AI in Finance

In an article published as part of its Financial Stability Review, the ECB acknowledged the opportunities presented by AI, such as improved information processing, enhanced customer service, and better detection of cyberthreats.

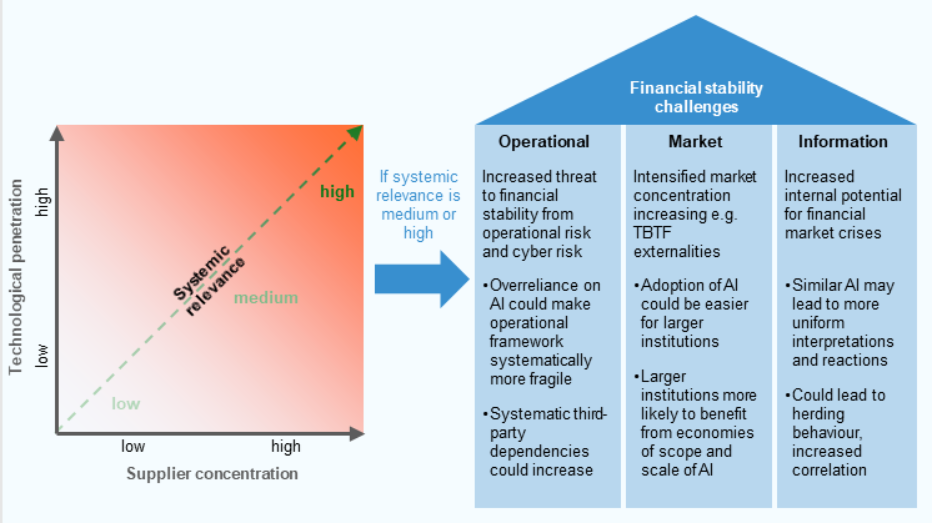

However, the central bank also cautioned about the risks associated with AI, including herding behavior, overreliance on a limited number of providers, and the possibility of more sophisticated cyberattacks.

“Should many institutions use AI for asset allocation and rely only on a few AI providers, for example, then supply and demand for financial assets may be distorted systematically, triggering costly adjustments in markets that harm their resilience,” the central bank warned.

While the adoption of AI systems by European financial companies is still in its early stages, the ECB emphasized the importance of monitoring the implementation of AI across the financial system as the technology evolves.

The central bank noted that the European Union has already formulated the world's first comprehensive AI rules, which impose specific transparency obligations and copyright requirements on general-purpose and high-risk AI systems.

Despite these measures, the ECB suggested that additional regulatory initiatives may be necessary if market failures become apparent and cannot be addressed by the current prudential framework.

"Therefore, the implementation of AI across the financial system needs to be closely monitored as the technology evolves," the ECB said.

The ECB aims to act as the industry rapidly evolves. The generative AI market in payments is projected to exceed $85 billion by 2030. Investors are also using AI applications for portfolio management, with the associated assets under management (AUM) estimated at between $2.2 trillion and $3.7 trillion in 2020.

ECB Lists the Main Risks of AI

The ECB's call for monitoring and potential regulation of AI in finance comes amid growing concerns about AI's impact on various industries. The central bank has identified several potential benefits and risks associated with the technology's use in the financial sector.

AI enhances data processing and generation, allowing for the analysis of unstructured data such as text, voice, and images. However, AI systems based on foundation models may be prone to data quality issues, as they can "learn" and perpetuate biases or errors inherent in the training data. Privacy concerns also arise over how user input data is handled and the potential for data leakage.

“AI models are adaptable, flexible and scalable, but prone to bias, hallucination and greater complexity, which makes them less robust,” the ECB stated.

Deploying AI in new tasks and processes presents risks, as it is difficult to predict and control how AI will perform in practice. Furthermore, AI could be misused in harmful ways, such as by criminals fine-tuning it for cyberattacks, misinformation, or market manipulation.